4K UHD vs HDR Differences in Quality, Gaming, Hardware, etc.

You may have heard a lot about the resolution buzzword 4K. But when you shop for a TV, computer, or camera, play games, or stream Netflix, you'll see everyone talking about another term: HDR. That's where the confusion begins. Are 4K UHD and HDR the same thing? No, but both are important technologies for improving visual quality. So what’s the difference between 4K UHD and HDR?

Convert 4K UHD HDR Videos with Best Quality

Winxvideo AI can convert 4K UHD HDR videos to HEVC, MP4, MKV, MOV, AVI, WebM, and other formats. Its high-quality engine preserves the best possible output quality, and GPU acceleration delivers a speed that is about 5x faster than others. It can also enhance 4K UHD HDR videos with an AI upscaler, and download, edit, record, and compress videos.

4K UHD and HDR detailed differences

Part 1. What Are 4K UHD and HDR?

What is 4K UHD?

4K refers to a display resolution with four times as many pixels as a standard 1080p Full HD TV. Compared with the more pixelated images of lower-resolution screens like 1080p or 720p, 4K televisions produce a clearer, more lifelike picture thanks to the extra pixels. The 4K label is typically applied to two different screen resolutions:

- The most common format, Ultra HD or UHD, is 3,840 horizontal pixels by 2,160 vertical pixels.

- Digital cinema 4K is slightly wider at 4,096 by 2,160.

What is HDR?

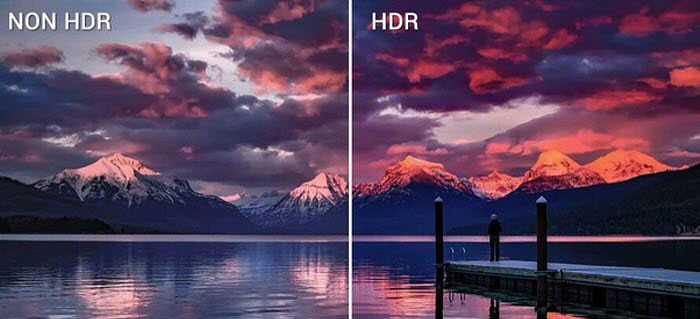

HDR (high dynamic range) refers to the range of color and contrast between the lightest and darkest tones in a digital image. HDR content stores wider and more granular values of brightness, darkness, and color than standard dynamic range (SDR) content, which lets TVs display brighter brights and darker darks. The main HDR formats are:

- HDR10 – The most common HDR standard available in streaming content and UHD Blu-ray.

- HDR10+ – An extension of HDR10, led by Samsung, that adds dynamic metadata.

- Dolby Vision – A proprietary HDR format from Dolby that also uses dynamic metadata. It is less widely used than HDR10.

- HLG – A broadcast friendly HDR format from BBC and NHK.
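The "more granular values" that HDR content stores can be made concrete with bit depth. Bit depth is not covered above, so treat the figures as assumptions: the sketch takes 8 bits per color channel for typical SDR video and 10 bits per channel for HDR10, and counts how many distinct levels and colors each can encode.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct code values a single color channel can take."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Number of distinct RGB combinations at the given bits per channel."""
    return levels_per_channel(bits) ** 3


# Assumed bit depths: 8-bit for typical SDR video, 10-bit for HDR10.
for label, bits in [("SDR (8-bit)", 8), ("HDR10 (10-bit)", 10)]:
    print(f"{label}: {levels_per_channel(bits)} levels per channel, "
          f"{total_colors(bits):,} colors")
```

Each extra bit doubles the number of steps per channel, which is what lets HDR content describe gradients in very bright or very dark areas with less visible banding.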

Quick Comparison between 4K and HDR

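| | 4K UHD | HDR |
|---|---|---|
| What it describes | Resolution (number of pixels) | Range of color, brightness, and contrast |
| Common formats | 3,840 x 2,160 (UHD), 4,096 x 2,160 (digital cinema) | HDR10, HDR10+, Dolby Vision, HLG |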

Part 2. 4K UHD vs HDR Difference in Picture Quality

4K UHD

A picture is made up of tiny blocks of color called pixels. Pixels are arranged in a grid on computer and TV screens, and each can light up at a different brightness. If you stand close enough to your screen, you can see the individual pixel blocks. When you stand back, the pixels blend together to form an image.

4K UHD resolution has about 8.3 million active pixels, 4 times the number of pixels on a 1080p display and about 24 times that of standard definition (720 x 480). Having four times as many pixels means the pixel density for a given screen area goes up and the pixel blocks become smaller, so images look sharper and more detailed. Unlike the more pixelated images of lower resolutions like 1080p, the smoother edges and greater visual depth in 4K images give viewers the feeling that they're looking right out of a window.
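The arithmetic behind these comparisons is easy to check. The sketch below counts pixels for each resolution and estimates pixel density; the 55-inch screen size and the 720 x 480 figure for standard definition are assumptions chosen for illustration.

```python
import math

RESOLUTIONS = {
    "SD (720 x 480)": (720, 480),
    "Full HD 1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
    "Digital cinema 4K": (4096, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in the grid."""
    return width * height


def pixels_per_inch(width: int, height: int, diagonal_inches: float) -> float:
    """Pixel density: pixels along the diagonal divided by its length in inches."""
    return math.hypot(width, height) / diagonal_inches


uhd = pixel_count(*RESOLUTIONS["4K UHD"])
for name, (w, h) in RESOLUTIONS.items():
    n = pixel_count(w, h)
    print(f"{name}: {n:,} pixels (4K UHD has {uhd / n:.1f}x as many)")

# Assumed 55-inch screen, to compare density at the same physical size.
for name in ("Full HD 1080p", "4K UHD"):
    w, h = RESOLUTIONS[name]
    print(f"{name} at 55 inches: {pixels_per_inch(w, h, 55):.0f} PPI")
```

At the same screen size, doubling both the width and the height in pixels doubles the pixel density, which is why individual pixels are harder to see on a 4K panel.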

HDR

Unlike Ultra HD 4K, HDR has nothing to do with resolution. It's a method of getting more detail into both the brightest and the darkest elements of a picture. For example, when you shoot a scene in bright sunlight without HDR, exposing for the dark areas leaves the bright parts washed out and white, while exposing for the bright areas leaves the shadows too dark to show any detail.

HDR goes hand in hand with WCG (Wide Color Gamut) to bring video fidelity close to what human eyes actually see. It involves a process called color grading, which smooths out harsh shadows, enhances colors, and so on. With HDR, the contrast between lighter and darker areas is greater, so you can distinguish elements better without blooming and washouts or muddy, blurred colors. As a result, you can see the real colors of things like highlights, reflections, sunshine, and shadows.
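One common way to put a number on the contrast between the brightest and darkest parts of a picture is to count "stops", where each stop is a doubling of brightness. The sketch below is only an illustration: the black level and peak brightness values are hypothetical examples, not measurements of any real display.

```python
import math


def dynamic_range_stops(black_nits: float, peak_nits: float) -> float:
    """Dynamic range in stops: how many times brightness doubles from black to peak."""
    if black_nits <= 0 or peak_nits <= black_nits:
        raise ValueError("need 0 < black_nits < peak_nits")
    return math.log2(peak_nits / black_nits)


# Hypothetical example displays (black level, peak brightness) in nits.
examples = {
    "SDR display (assumed 0.1 to 100 nits)": (0.1, 100),
    "HDR display (assumed 0.05 to 1000 nits)": (0.05, 1000),
}

for name, (black, peak) in examples.items():
    print(f"{name}: {peak / black:,.0f}:1 contrast, "
          f"{dynamic_range_stops(black, peak):.1f} stops")
```

A display that gets brighter at the top end and darker at the bottom end gains stops on both sides, which is the "brighter brights and darker darks" effect in numeric form.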


Part 3. 4K UHD vs HDR in Gaming

4K gaming has been mainstream on PC for a while. With a high-performance GPU and a 4K monitor, you can play AAA games in 4K resolution. 4K support has also come to consoles from Sony and Microsoft. The Xbox One S and PS4 Pro both have upscaling capability to generate 4K UHD visuals. You can now get more advanced 4K gaming on the latest Xbox Series X and PS5, both of which support native 4K HDR at frame rates of up to 120 fps. The Nintendo Switch 2 is capable of 4K gaming too.

Alongside 4K, HDR has become increasingly common in AAA games, where it has long been used to make pictures more realistic and immersive. Back in 2016, Microsoft released the Xbox One S, the first HDR-compatible console. Sony followed by releasing the 4K- and HDR-compatible PS4 Pro, and later updated its standard PS4 console with HDR support. Microsoft is also planning to automatically add HDR support to more than 1,000 PC games.

Part 4. 4K UHD vs HDR in Hardware Requirements

4K UHD

4K video looks awesome, and it's hard to beat watching movies, playing games, or working at 4K. However, the increased resolution and bit rate place heavy demands on a workstation. The good news is that most modern hardware is capable of handling 4K content. Your hardware should at least meet the following requirements:

- GPU – At least a GeForce GTX 960 on a desktop or a GTX 980M on a laptop. Anything less than a GTX 1080 Max-Q isn’t going to do well in AAA games. For 4K @60fps, you’d better have a GPU as powerful as the RTX 30 series.

- CPU – A newer Intel CPU, at least an 8th-generation Core i5 or above, or an AMD Ryzen 3000 series CPU or newer.

- Monitor – At least a 27-inch 4K display with a refresh rate of at least 60Hz and HDCP 2.2.

- Cable – HDMI 1.4 for 4K @30fps, or HDMI 2.0 or higher for 4K @60fps. Or use a DisplayPort cable.
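A rough bandwidth estimate shows why the HDMI version matters for 4K. The sketch below is a simplification under stated assumptions: it counts only active pixels of uncompressed 8-bit RGB video (ignoring blanking intervals and audio), and it uses approximate usable data rates of 8.16 Gbit/s for HDMI 1.4 and 14.4 Gbit/s for HDMI 2.0.

```python
# Approximate usable video data rates in Gbit/s (assumed figures, after
# encoding overhead on the link).
LINK_DATA_RATE_GBPS = {
    "HDMI 1.4": 8.16,
    "HDMI 2.0": 14.4,
}


def raw_video_gbps(width: int, height: int, fps: int,
                   bits_per_channel: int = 8) -> float:
    """Active-pixel data rate for uncompressed RGB video, ignoring blanking."""
    return width * height * fps * bits_per_channel * 3 / 1e9


def fits(link: str, width: int, height: int, fps: int,
         bits_per_channel: int = 8) -> bool:
    """True if the estimated video data rate fits within the link's data rate."""
    needed = raw_video_gbps(width, height, fps, bits_per_channel)
    return needed <= LINK_DATA_RATE_GBPS[link]


for fps in (30, 60):
    needed = raw_video_gbps(3840, 2160, fps)
    for link in LINK_DATA_RATE_GBPS:
        verdict = "fits" if fits(link, 3840, 2160, fps) else "does not fit"
        print(f"4K @{fps}fps needs about {needed:.2f} Gbit/s: {verdict} in {link}")
```

Real signals also carry blanking intervals, so actual requirements are somewhat higher than this estimate, but the conclusion is the same: 4K at 60fps exceeds what HDMI 1.4 can carry and needs HDMI 2.0 or higher.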

HDR

Similar to 4K Ultra HD, HDR also places high demands on the hardware. To display HDR correctly, every part of your pipeline must support HDR, including the GPU, the output device, and the cables.

- GPU – At least Nvidia GTX 950 or AMD Radeon R9 380. Or at least a 7th-generation Kaby Lake CPU for Intel's integrated graphics.

- Monitor – An HDR TV or monitor with a max brightness of at least 300 nits.

- Cable – DisplayPort 1.4 or HDMI 2.0 or higher (for HDR 4K at up to 60fps).
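The monitor and cable requirements above can be turned into a quick checklist. The sketch below is a hypothetical helper, not part of any real tool: the function name, its parameters, and the simple "major.minor" version format are all assumptions, and the thresholds are the ones listed above.

```python
from typing import List, Tuple

MIN_PEAK_NITS = 300
# Minimum connector versions taken from the list above.
MIN_VERSION = {"HDMI": (2, 0), "DisplayPort": (1, 4)}


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a plain numeric version such as '2.1' into a comparable tuple (2, 1)."""
    return tuple(int(part) for part in text.split("."))


def hdr_problems(peak_nits: float, connector: str, version: str) -> List[str]:
    """Return a list of reasons the setup falls short; empty means it passes."""
    problems = []
    if peak_nits < MIN_PEAK_NITS:
        problems.append(
            f"peak brightness {peak_nits} nits is below {MIN_PEAK_NITS} nits")
    required = MIN_VERSION.get(connector)
    if required is None:
        problems.append(f"unknown connector type: {connector}")
    elif parse_version(version) < required:
        needed = ".".join(str(n) for n in required)
        problems.append(f"{connector} {version} is older than {connector} {needed}")
    return problems


print(hdr_problems(400, "HDMI", "2.1"))         # passes both checks
print(hdr_problems(250, "DisplayPort", "1.2"))  # fails both checks
```

The check does not cover the GPU, since GPU capability depends on the exact model and is easier to confirm from the manufacturer's specifications.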

Conclusion

In summary, 4K UHD refers to the resolution of the display (that is, the number of pixels), while HDR concerns image parameters such as color, brightness, and contrast. They are two different aspects of a display. Together, 4K and HDR level up the viewing experience, especially for those who work on computers in fields such as graphic design and architecture.